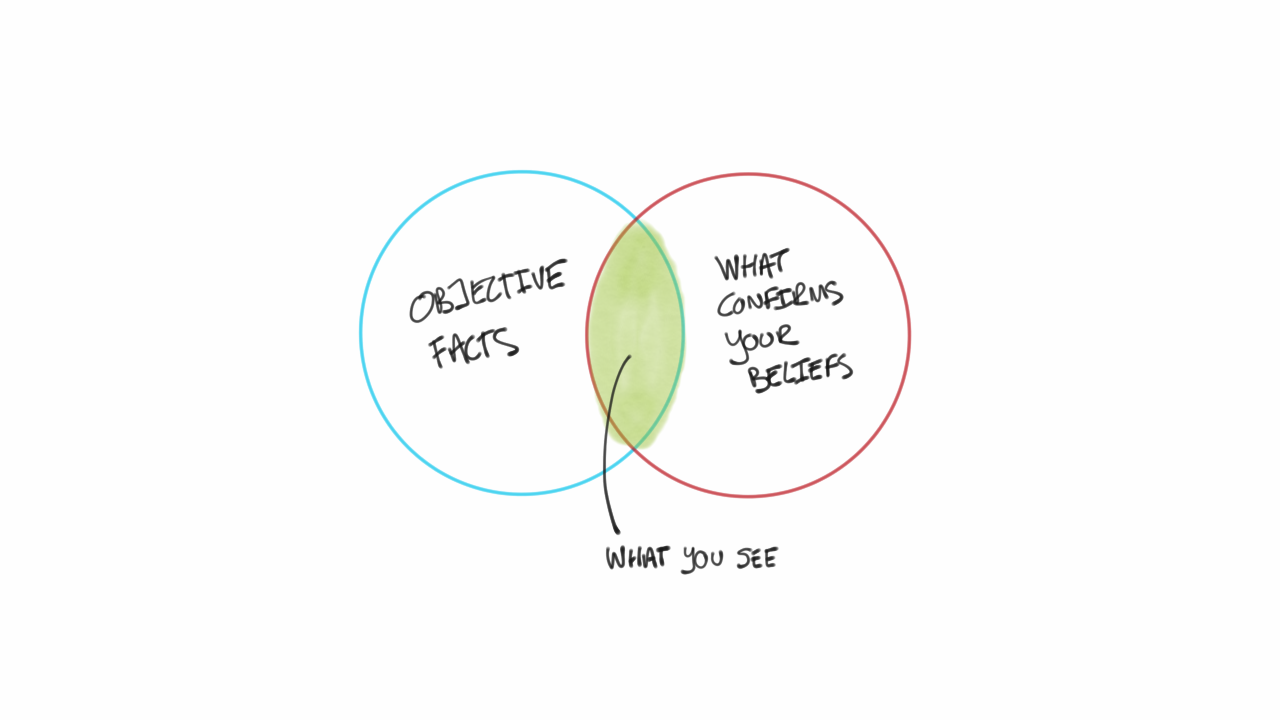

Confirmation bias is our tendency to cherry-pick information that confirms our existing beliefs or ideas. Confirmation bias explains why two people with opposing views on a topic can see the same evidence and come away feeling validated by it. This cognitive bias is most pronounced in the case of ingrained, ideological, or emotionally charged views.

Failing to interpret information in an unbiased way can lead to serious misjudgments. By understanding confirmation bias, we can learn to identify it in ourselves and others, and to be cautious of data that seems to immediately support our views.

When we feel as if others “cannot see sense,” a grasp of how confirmation bias works can enable us to understand why. Willard V. Quine and J.S. Ullian described this bias in The Web of Belief as such:

The desire to be right and the desire to have been right are two desires, and the sooner we separate them the better off we are. The desire to be right is the thirst for truth. On all counts, both practical and theoretical, there is nothing but good to be said for it. The desire to have been right, on the other hand, is the pride that goeth before a fall. It stands in the way of our seeing we were wrong, and thus blocks the progress of our knowledge.

Experiments beginning in the 1960s revealed our tendency to confirm existing beliefs rather than question them or seek new ones. Other research has revealed our single-minded need to reinforce our ideas.

“What the human being is best at doing is interpreting all new information so that their prior conclusions remain intact.”— Warren Buffett

Like many mental models, confirmation bias was first identified by the ancient Greeks. In The History of the Peloponnesian War, Thucydides described this tendency as such:

For it is a habit of humanity to entrust to careless hope what they long for, and to use sovereign reason to thrust aside what they do not fancy.

Our use of this cognitive shortcut is understandable. Evaluating evidence (especially when it is complicated or unclear) requires a great deal of mental energy. Our brains prefer to take shortcuts. This saves the time needed to make decisions, especially when we’re under pressure. As many evolutionary scientists have pointed out, our minds are unequipped to handle the modern world. For most of human history, people experienced very little new information during their lifetimes. Decisions tended to be survival based. Now, we are constantly receiving new information and have to make numerous complex choices each day. To stave off overwhelm, we have a natural tendency to take shortcuts.

In “The Case for Motivated Reasoning,” Ziva Kunda wrote, “we give special weight to information that allows us to come to the conclusion we want to reach.” Accepting information that confirms our beliefs is easy and requires little mental energy. Contradicting information causes us to shy away, grasping for a reason to discard it.

In The Little Book of Stupidity, Sia Mohajer wrote:

The confirmation bias is so fundamental to your development and your reality that you might not even realize it is happening. We look for evidence that supports our beliefs and opinions about the world but excludes those that run contrary to our own… In an attempt to simplify the world and make it conform to our expectations, we have been blessed with the gift of cognitive biases.

“The human understanding when it has once adopted an opinion, draws all things else to support and agree with it. And though there be a greater number and weight of instances to be found on the other side, yet these it either neglects and despises, or else by some distinction sets aside and rejects.”— Francis Bacon

How Confirmation Bias Clouds Our Judgment

The complexity of confirmation bias arises partly from the fact that it is impossible to overcome it without an awareness of the concept. Even when shown evidence that contradicts a biased view, we may still interpret it in a manner that reinforces our current perspective.

In one Stanford study, half of the participants were in favor of capital punishment, and the other half were opposed to it. Both groups read details of the same two fictional studies. Half of the participants were told that one study supported the deterrent effect of capital punishment and the other undermined it. The remaining participants were told the opposite. Regardless, the majority of participants stuck to their original views, pointing to the data that supported them and discarding the data that did not.

Confirmation bias clouds our judgment. It gives us a skewed view of information, even when that information consists only of numerical figures. Understanding this cannot fail to transform a person’s worldview, or rather, their perspective on it. Lewis Carroll stated, “we are what we believe we are,” but it seems that the world is also what we believe it to be.

A poem by Shannon L. Alder illustrates this concept:

Read it with sorrow and you will feel hate.

Read it with anger and you will feel vengeful.

Read it with paranoia and you will feel confusion.

Read it with empathy and you will feel compassion.

Read it with love and you will feel flattery.

Read it with hope and you will feel positive.

Read it with humor and you will feel joy.

Read it without bias and you will feel peace.

Do not read it at all and you will not feel a thing.

Confirmation bias is somewhat linked to our memories (similar to availability bias). We have a penchant for recalling evidence that backs up our beliefs. However neutral the original information was, we fall prey to selective recall. As Leo Tolstoy wrote:

The most difficult subjects can be explained to the most slow-witted man if he has not formed any idea of them already; but the simplest thing cannot be made clear to the most intelligent man if he is firmly persuaded that he knows already, without a shadow of doubt, what is laid before him.

“Beliefs can survive potent logical or empirical challenges. They can survive and even be bolstered by evidence that most uncommitted observers would agree logically demands some weakening of such beliefs. They can even survive the destruction of their original evidential bases.”— Lee Ross and Craig Anderson

Why We Ignore Contradicting Evidence

Why is it that we struggle to even acknowledge information that contradicts our views?

When first learning about the existence of confirmation bias, many people deny that they are affected. After all, most of us see ourselves as intelligent, rational people. So, how can our beliefs persevere even in the face of clear empirical evidence? Even when something is proven untrue, many entirely sane people continue to find ways to mitigate the subsequent cognitive dissonance.

Much of this is the result of our need for cognitive consistency. We are bombarded by information. It comes from other people, the media, our experience, and various other sources. Our minds must find means of encoding, storing, and retrieving the data we are exposed to. One way we do this is by developing cognitive shortcuts and models. These can be either useful or unhelpful.

Confirmation bias is one of the less helpful heuristics that exist as a result. Our existing beliefs influence how we interpret information, and we are more likely to recall information that fits them. As a consequence, we tend to see more evidence that reinforces our worldview. Confirmatory data is taken seriously, while disconfirming data is treated with skepticism. Our general assimilation of information is subject to deep bias.

Constantly evaluating our worldview is exhausting, so we prefer to strengthen it instead. Plus, holding different ideas in our heads is hard work. It’s much easier to just focus on one.

We ignore contradictory evidence because it is so unpalatable for our brains. According to research by Jennifer Lerner and Philip Tetlock, we are motivated to think critically only when held accountable by others. If we are expected to justify our beliefs, feelings, and behaviors to others, we are less likely to be biased towards confirmatory evidence. This is less out of a desire to be accurate, and more the result of wanting to avoid negative consequences or derision for being illogical. Ignoring evidence can be beneficial, such as when we side with the beliefs of others to avoid social alienation.

Examples of Confirmation Bias in Action

Creationists vs. Evolutionary Biologists

A prime example of confirmation bias can be seen in the clashes between creationists and evolutionary biologists. The latter use scientific evidence and experimentation to reveal the process of biological evolution over millions of years. The former see the Bible as being true in the literal sense and think the world is only a few thousand years old. Creationists are skilled at mitigating the cognitive dissonance caused by factual evidence that disproves their ideas. Many consider the non-empirical “evidence” for their beliefs (such as spiritual experiences and the existence of scripture) to be of greater value than the empirical evidence for evolution.

Evolutionary biologists have used the fossil record to show that the process of evolution has occurred over millions of years. Meanwhile, some creationists view the same fossils as planted by a god to test our beliefs. Others claim that fossils are proof of the global flood described in the Bible. They dismiss evidence that contradicts these conspiratorial ideas, or reinterpret it to confirm what they already think.

Doomsayers

Take a walk through London on a busy day, and you are pretty much guaranteed to see a doomsayer on a street corner, ranting about the upcoming apocalypse. Return a while later and you will find them still there, announcing that the end has been postponed.

In When Prophecy Fails, Leon Festinger explained the phenomenon this way:

Suppose an individual believes something with his whole heart; suppose further that he has a commitment to this belief, that he has taken irrevocable actions because of it; finally, suppose that he is presented with evidence, unequivocal and undeniable evidence, that his belief is wrong: what will happen? The individual will frequently emerge, not only unshaken but even more convinced of the truth of his beliefs than ever before. Indeed, he may even show a new fervor about convincing and converting people to his view.

Music

Confirmation bias in music is interesting because it is actually part of why we enjoy it so much. According to Daniel Levitin, author of This Is Your Brain on Music:

As music unfolds, the brain constantly updates its estimates of when new beats will occur, and takes satisfaction in matching a mental beat with a real-in-the-world one.

Witness the way a group of teenagers will act when someone puts on “Wonderwall” by Oasis or “Creep” by Radiohead. Or how their parents react to “Starman” by Bowie or “Alone” by Heart. Or even their grandparents to “The Way You Look Tonight” by Sinatra or “Non, Je ne Regrette Rien” by Edith Piaf. The ability to predict each successive beat or syllable is intrinsically pleasurable. This is a case of confirmation bias serving us well. We learn to understand musical patterns and conventions, and we enjoy seeing them play out.

Homeopathy

The multibillion-dollar homeopathy industry is an example of mass confirmation bias.

Homeopathy itself dates back to Samuel Hahnemann in the late eighteenth century, but a much-publicized modern claim in its favor came from Jacques Benveniste, a French researcher studying histamines. Benveniste became convinced that as a solution of histamines was diluted, the effectiveness increased due to what he termed “water memories.” The tests were run without blinding, leaving the results open to experimenter bias.

Benveniste was so certain of his hypothesis that he found data to confirm it and ignored that which did not. Other researchers repeated his experiments with appropriate blinding and proved Benveniste’s results to have been false. Many of the people who worked with him withdrew from science as a result.

Yet homeopathy supporters have only grown in number. They cling to any evidence that supports homeopathy while ignoring evidence that does not.

“One of the biggest problems with the world today is that we have large groups of people who will accept whatever they hear on the grapevine, just because it suits their worldview—not because it is actually true or because they have evidence to support it. The striking thing is that it would not take much effort to establish validity in most of these cases… but people prefer reassurance to research.”— Neil deGrasse Tyson

Scientific Experiments

In good scientific experiments, researchers should seek to falsify their hypotheses, not to confirm them. Unfortunately, this is not always the case (as shown by homeopathy). There are many cases of scientists interpreting data in a biased manner, or repeating experiments until they achieve the desired result. Confirmation bias also comes into play when scientists peer-review studies. They tend to give positive reviews of studies that confirm their views and of studies accepted by the scientific community.

This is problematic. Inadequate research programs can continue past the point where the evidence shows their hypothesis to be false. Confirmation bias wastes a huge amount of time and funding. We must not take science at face value and must be aware of the role of biased reporting.

“The eye sees only what the mind is prepared to comprehend.”— Robertson Davies

Conclusion

This article can provide an opportunity for you to assess how confirmation bias affects you. Consider looking back over the previous paragraphs and asking:

- Which parts did I automatically agree with?

- Which parts did I ignore or skim over without realizing?

- How did I react to the points which I agreed or disagreed with?

- Did this post confirm any ideas I already had? Why?

- What if I thought the opposite of those ideas?

Being cognizant of confirmation bias is not easy, but with practice, it is possible to recognize the role it plays in the way we interpret information. You need to search out disconfirming evidence.

As Rebecca Goldstein wrote in Incompleteness: The Proof and Paradox of Kurt Gödel:

All truths — even those that had seemed so certain as to be immune to the very possibility of revision — are essentially manufactured. Indeed, the very notion of the objectively true is a socially constructed myth. Our knowing minds are not embedded in truth. Rather, the entire notion of truth is embedded in our minds, which are themselves the unwitting lackeys of organizational forms of influence.

To learn more about confirmation bias, read The Little Book of Stupidity or The Black Swan.